How faculty are tackling the complexities of artificial intelligence

In recent months, artificial intelligence, or AI, has seized the culture’s attention. New, widely available tools allow computers to “create” art (however ethically dubious that process may be), and what are called large language models allow programs like ChatGPT to “write” emails, papers, stories, and much more. The implications are radical, one New Yorker writer says: “Bodies of knowledge and skills that have traditionally taken lifetimes to master are being swallowed at a gulp.” The technology has already affected all the disciplines FIT teaches, and teaching itself.

Last year, the FIT faculty senate formed an ad hoc committee with 20 faculty members, charged with making recommendations for the use of AI technology and identifying resources needed to support its development. “We want to be prudent, but also current,” says Jeffrey Riman, Center for Excellence in Teaching coordinator and co-chair of the committee. Hue asked members of the committee and others at FIT about how they are grappling with this revolutionary technology.

Subhalakshmi Gooptu

Assistant professor, English and Communication Studies

My students and I have conversations about how we can use AI at different stages of the writing process, while still being critical of its outputs and impact. They use it for their initial brainstorming and less for revision because they’re skeptical of how it sounds and they value their own voices. For a writing teacher, that’s the biggest win.

C.J. Yeh

Professor, Advertising and Digital Design

A lot of people are wowed by these photo-like images that AI can create, but I personally am more interested in how it can help us achieve what we want to do in more interesting and efficient ways. We can use AI to test out ideas quickly or create a storyboard without spending hours in Photoshop. Fewer junior coders and designers will get jobs because basic coding and design production can be done quickly with AI. But on the plus side, designers and creatives in the future will be a lot more powerful: You will be able to explore and execute what you want much faster.

Jeffrey Riman

Coordinator, Center for Excellence in Teaching; co-chair, SUNY Fact2 Task Group on Optimizing Artificial Intelligence in Higher Education; co-chair, FIT ad hoc committee on AI

I’m less worried about students cheating because there’s no reliable way for us to detect AI at this time. Instead, let’s focus on elevating the work of our students using AI. Companies want to hire students with some education in how to use AI instead of people who are uninformed about the risks of misuse.

Katelyn Burton Prager

Assistant professor, English and Communication Studies; co-chair, FIT ad hoc committee on AI

My students are allowed to use it, but they must disclose their use and be held accountable for its inaccuracies or hallucinations if they fail to verify the information with other sources. I’m trying to treat AI just like I would other digital tools. I know students are using Google and Wikipedia. I know they’re using Grammarly. My job is to teach them the strengths and weaknesses of these tools, the ways they can be beneficial and what they lose when they use AI to circumvent the critical-thinking process.

Amy Werbel

Art History and Museum Professions, History of Art; president, Faculty Senate

In my online courses, I started getting homework responses that were not good at all. In the class discussion forum, you could see students using the chatbot in their replies. It didn’t sound like the human beings I’d been teaching. But now we’re getting to a point where it’s much harder to detect.

I use an “appealing to their better angels” approach. I’m clear about the value of understanding history and speaking to each other with integrity. If you’re having a bot reply to your classmate, that’s not a conversation I want to be in. It’s like, “Let’s be human together.”

Sonja Chapman

Associate professor, International Trade and Marketing for the Fashion Industries

I don’t believe AI should be used to replace your own thinking, but it can enhance your thinking. Students used an AI chatbot for a practicum assignment. They put their topic in and got some suggested outlines for their papers and then went back to their classmates to ask what they thought. The most advanced students said the AI outline was too simplified. For the others, it provided robust guidance. But they still had to do research and fill in the details. If students use the technology properly, they’ll probably do more work than they would have without it, but it does give them ideas that weren’t at the forefront of their consciousness.

Michael Ferraro

Executive director, DTech

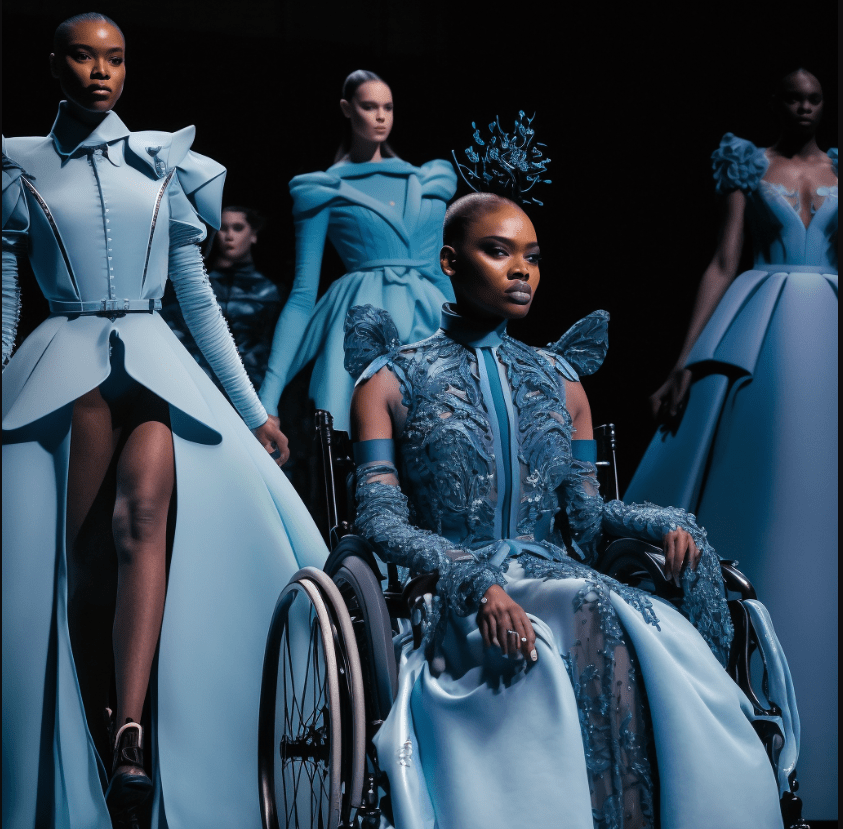

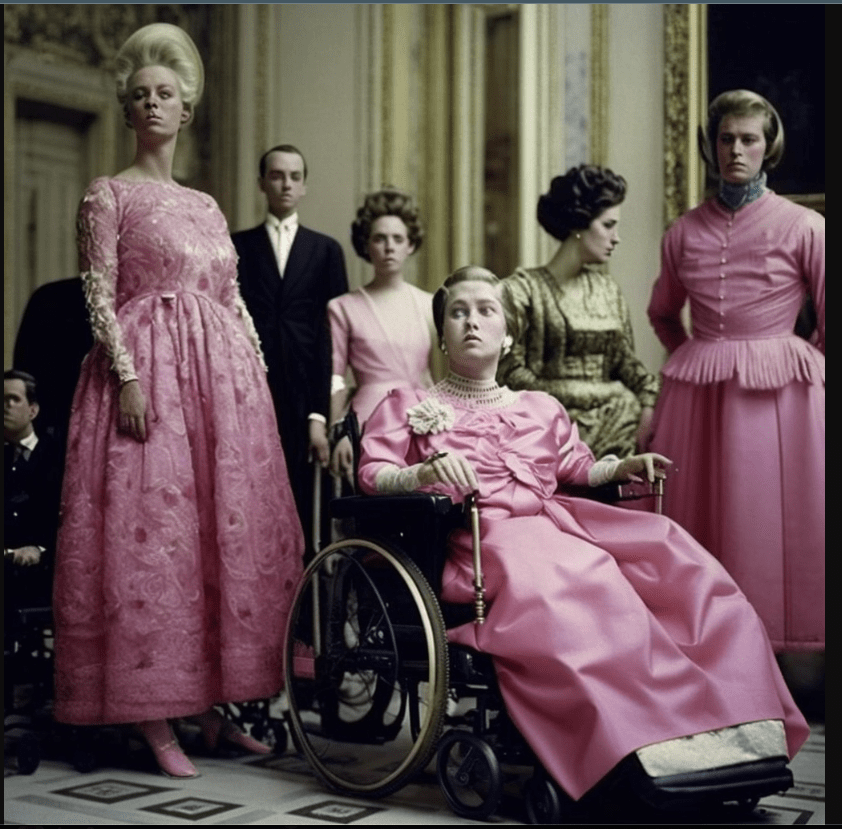

We worked with Amy Sperber, assistant professor of Fashion Design, on a project sponsored by HSBC addressing the lack of disability representation in the metaverse. Using Midjourney, a cutting-edge AI, we generated aspirational images featuring a diverse community of individuals with disabilities in stylish fashion settings. The images could be curated for a virtual or physical exhibition.

DTech hosted an AI symposium on March 21, exploring the multifaceted landscape of AI and its impact on the creative industries. Fashion icon Norma Kamali ’65 spoke about integrating AI into her creative process, and we showcased works from generative AI agency Maison Meta’s AI Fashion Week.

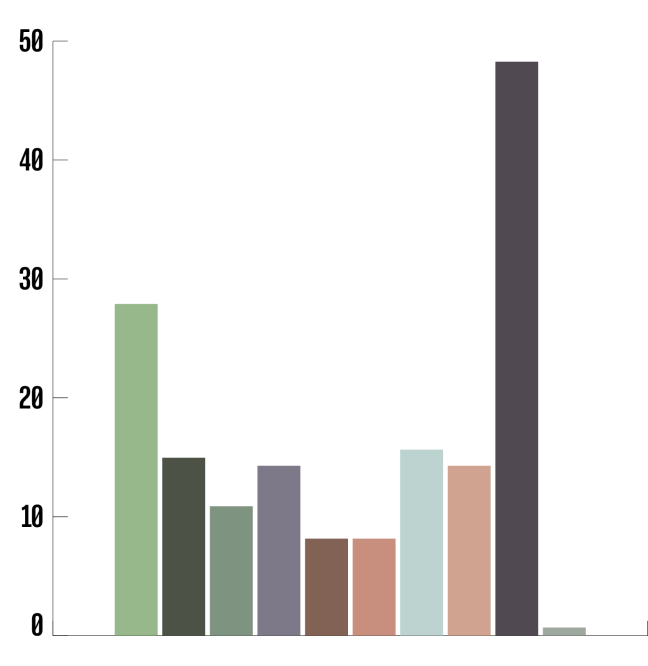

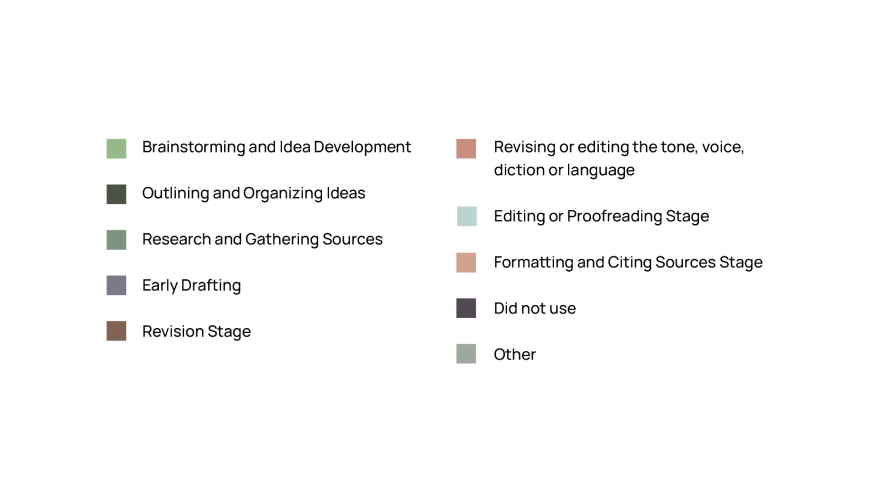

HOW MANY STUDENTS USE AI?

As part of an assessment conducted between the fall 2023 and spring 2024 semesters, all FIT students in writing classes were asked how they used generative AI to assist their work. About 150 students responded.

BOT OF DARKNESS

If the protagonist of Joseph Conrad’s masterpiece were AI, what would he, er, it say?

This semester, Calvin Williamson, professor of Mathematics, is teaching a class in large language models (LLMs), one of which is ChatGPT. As a playful exercise, he uploaded the text of Joseph Conrad’s classic novella Heart of Darkness (1899) to another LLM, an AI “assistant” named Claude. With the text as a guide, Claude answered Williamson’s questions as if it were Conrad’s protagonist, Marlow. In the story, Marlow travels into the unmapped center of an unnamed African country to find a missing ivory trader named Kurtz, who may have gone insane. The novella is frequently interpreted as a metaphor for colonialism—perfect for this moment, when power dynamics between AI and human beings are being sorted. In the end, will we be in control, or the bots?

Following is a short excerpt from that “conversation.”

WILLIAMSON: Do you believe we all have our own heart of darkness?

“MARLOW,” AKA CLAUDE: Unfortunately, I fear the answer is yes. No matter how civilized we fancy ourselves, each man and woman has an abyss inside. Most manage to keep it locked up, but knock away the shackles of society and it comes clawing out eventually.

WILLIAMSON: Did going on this trip make you face your own particular heart of darkness?

MARLOW: I’d like to believe my soul is not as black as Kurtz’s became. But his fall did make me take account of my own weaknesses and flaws in a more honest light. Strip away the rules that bind us, and how long till any man descends to savagery?